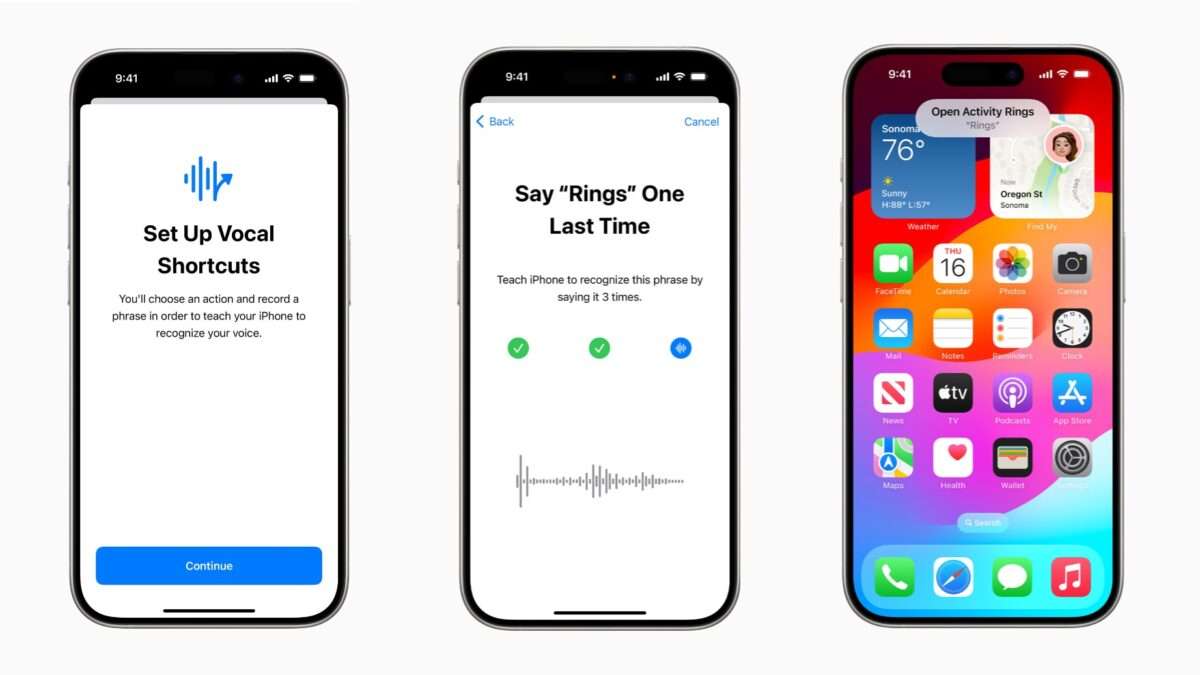

In its annual announcements for Global Accessibility Awareness Day (GAAD), Apple previewed Vocal Shortcuts, a new iOS 18 feature that lets you create custom voice commands that trigger Accessibility features and custom shortcuts. Instead of requiring a “Siri” trigger phrase, the vocal shortcut itself acts as the trigger.

Shortcuts already lets users run a shortcut by speaking its custom name to Siri; however, that requires an activation phrase (or pressing the physical button).

With Vocal Shortcuts, users can skip the trigger entirely and use the vocal shortcut phrase as the complete trigger and command. For example, rather than saying “Siri, turn the lights to 100%,” you could create a shortcut that sets the lights accordingly, then assign it a vocal shortcut that runs whenever you say “Light Bright,” even without saying “Siri” first.

Here’s how Apple describes it in their announcement, alongside the Listen for Atypical Speech feature:

> With Vocal Shortcuts, iPhone and iPad users can assign custom utterances that Siri can understand to launch shortcuts and complete complex tasks. Listen for Atypical Speech, another new feature, gives users an option for enhancing speech recognition for a wider range of speech. Listen for Atypical Speech uses on-device machine learning to recognize user speech patterns. Designed for users with acquired or progressive conditions that affect speech, such as cerebral palsy, amyotrophic lateral sclerosis (ALS), or stroke, these features provide a new level of customization and control, building on features introduced in iOS 17 for users who are nonspeaking or at risk of losing their ability to speak.

Plus, Vocal Shortcuts can be assigned to any Accessibility feature, no shortcut required. Pairing them with Shortcuts takes the feature further, letting a single phrase run multiple actions rather than just one pre-assigned command.

In many ways, Apple has built on features introduced in the past to make this possible: the introduction of, and improvements to, Sound Recognition have surely helped, as has the recent dropping of “Hey” from “Hey Siri.”

Check out the rest of Apple’s announcements for Global Accessibility Awareness Day, including the very cool Eye Tracking and Music Haptics features coming this year.