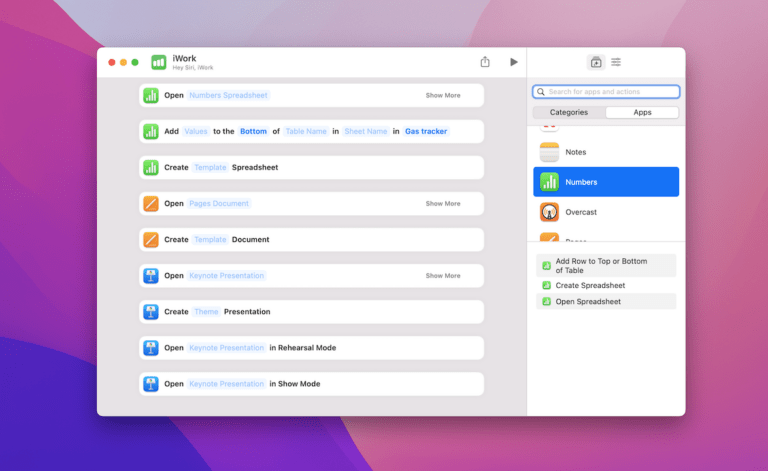

At WWDC25, Apple expanded access to its Foundation Models to third-party developers, making intelligence features easier to implement while maintaining privacy.

With the framework, developers can access Apple's local, on-device models, make requests to Private Cloud Compute when needed, and readily adopt tools like the Vision framework or SpeechAnalyzer.

In introducing these capabilities, Apple has produced the following Machine Learning & AI sessions:

Apple Developer sessions on Machine Learning & AI from WWDC2025

Intro

- Discover machine learning & AI frameworks on Apple platforms

Foundation Models

- Meet the Foundation Models framework

- Deep dive into the Foundation Models framework

- Code-along: Bring on-device AI to your app using the Foundation Models framework

- Explore prompt design & safety for on-device foundation models

MLX

- Explore large language models on Apple silicon with MLX

- Get started with MLX for Apple silicon

Features

- Dive deeper into Writing Tools

- Read documents using the Vision framework

- Bring advanced speech-to-text to your app with SpeechAnalyzer

More

- Optimize CPU performance with Instruments

- Combine Metal 4 machine learning and graphics

- What’s new in BNNS Graph

Explore all the Machine Learning & AI sessions from WWDC25, plus check out my recommended viewing order for the App Intents sessions.

P.S. Here’s the full list of sessions, no sections – copy these into your notes:

List of Apple Developer sessions on Machine Learning & AI from WWDC2025

- Discover machine learning & AI frameworks on Apple platforms

- Meet the Foundation Models framework

- Deep dive into the Foundation Models framework

- Code-along: Bring on-device AI to your app using the Foundation Models framework

- Explore prompt design & safety for on-device foundation models

- Explore large language models on Apple silicon with MLX

- Get started with MLX for Apple silicon

- Dive deeper into Writing Tools

- Read documents using the Vision framework

- Bring advanced speech-to-text to your app with SpeechAnalyzer

- Optimize CPU performance with Instruments

- Combine Metal 4 machine learning and graphics

- What’s new in BNNS Graph